
What is the Stable Distribution?

A distribution is stable if a sum of independent copies of a random variable has the same distribution as the original, up to a change of scale and location. Stable distributions are sometimes called ‘Lévy distributions’ after Paul Lévy, who described them in 1925. The nickname is a misnomer: the Lévy distribution is just one specific member of the broader family. All stable distributions nonetheless share certain properties that make them useful in probability calculations.

The Cauchy, Lévy and normal distributions are the best-known members of the family. They stand out because their probability density functions can be written in closed form, which is not true of stable distributions in general. Apart from the normal distribution, every stable distribution has heavy tails, and many are skewed.

Properties of Stable Distributions

The general stable distribution has four parameters (Barndorff-Nielsen et al., 2001):

- Index of stability: α ∈ (0, 2]. Controls how much probability lies in the extreme tails; the smaller α is, the heavier the tails. The Cauchy distribution has α = 1 and the normal distribution has α = 2.

- Skewness parameter: β ∈ [−1, 1]. If β = 0, then the distribution is symmetric. A normal distribution has β = 0.

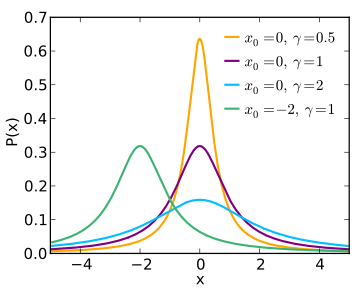

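- Scale parameter: γ > 0. A measure of dispersion. For the normal distribution, γ is tied to the population variance σ², but the exact relation depends on the parameterization: γ = σ²/2 (half the variance) when the characteristic function is written with γ|t|^α, and γ = σ/√2 when it is written with (γ|t|)^α.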

- Location parameter: δ. For a symmetric distribution (β = 0), δ is the median. When α > 1, δ is also the mean.
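The three closed-form members can be recovered from the four parameters. The sketch below checks this numerically with `scipy.stats.levy_stable`, assuming SciPy's default (S1) parameterization, in which the normal case has standard deviation √2·γ. The values of `gamma` and `delta` are arbitrary and chosen only for illustration.

```python
import numpy as np
from scipy import stats

gamma, delta = 1.5, 0.5          # arbitrary scale and location (illustrative)
x = np.linspace(-3.0, 3.0, 7)    # points at which to compare densities

# alpha = 2: normal distribution with standard deviation sqrt(2) * gamma
stable_normal = stats.levy_stable.pdf(x, 2.0, 0.0, loc=delta, scale=gamma)
normal = stats.norm.pdf(x, loc=delta, scale=np.sqrt(2.0) * gamma)

# alpha = 1, beta = 0: Cauchy distribution
stable_cauchy = stats.levy_stable.pdf(x, 1.0, 0.0, loc=delta, scale=gamma)
cauchy = stats.cauchy.pdf(x, loc=delta, scale=gamma)

# alpha = 1/2, beta = 1: Levy distribution (supported on x > delta only)
x_pos = delta + np.array([0.5, 1.0, 2.0, 4.0])
stable_levy = stats.levy_stable.pdf(x_pos, 0.5, 1.0, loc=delta, scale=gamma)
levy = stats.levy.pdf(x_pos, loc=delta, scale=gamma)

print("normal matches:", np.allclose(stable_normal, normal, rtol=1e-6))
print("Cauchy matches:", np.allclose(stable_cauchy, cauchy, rtol=1e-6))
print("Levy matches:  ", np.allclose(stable_levy, levy, rtol=1e-6))
```

Other parameterizations place γ and δ differently, so the same comparison under another convention would need the scale and location adjusted accordingly.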

Alpha Stable Distribution

The alpha-stable distribution is a versatile family of distributions specified by four parameters: α, β, γ and δ. Respectively, these are the characteristic exponent, which determines how heavy the tails are; the skewness, which determines whether the distribution is skewed right or left; the scale, which plays a role similar to the variance of a normal distribution; and the location, which shifts the distribution much as the mean does. The family is often written compactly as S(α, β, γ, δ). Any stable random variable can be obtained from a standard one (γ = 1, δ = 0) with the same α and β by scaling and shifting.
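Most stable distributions have no closed-form density, so the family is usually defined through its characteristic function. The sketch below writes that function in one common convention (often called S1) and checks the property that gives the family its name: the sum of two independent copies is again stable with the same α and β, with scale 2^(1/α)·γ and location 2δ. The function name `stable_cf` and the parameter values are illustrative assumptions, not taken from the references.

```python
import numpy as np

def stable_cf(t, alpha, beta, gamma=1.0, delta=0.0):
    """Characteristic function of S(alpha, beta, gamma, delta), S1 convention."""
    t = np.asarray(t, dtype=float)
    if alpha == 1.0:
        # log|t| is undefined at t = 0, where the skew term vanishes anyway
        with np.errstate(divide="ignore", invalid="ignore"):
            skew = np.where(
                t == 0.0, 0.0,
                (2.0 / np.pi) * np.sign(t) * np.log(np.abs(t)),
            )
        log_phi = 1j * delta * t - gamma * np.abs(t) * (1.0 + 1j * beta * skew)
    else:
        skew = np.sign(t) * np.tan(np.pi * alpha / 2.0)
        log_phi = (1j * delta * t
                   - (gamma * np.abs(t)) ** alpha * (1.0 - 1j * beta * skew))
    return np.exp(log_phi)

t = np.linspace(-2.0, 2.0, 9)
alpha, beta, gamma, delta = 1.5, 0.5, 1.2, 0.3   # illustrative values

# The characteristic function of a sum of independent copies is the product
lhs = stable_cf(t, alpha, beta, gamma, delta) ** 2
rhs = stable_cf(t, alpha, beta, 2.0 ** (1.0 / alpha) * gamma, 2.0 * delta)
print("sum of two copies is stable:", np.allclose(lhs, rhs))

# Special cases: alpha = 2 gives exp(-t^2), alpha = 1 with beta = 0 gives exp(-|t|)
print("normal case:", np.allclose(stable_cf(t, 2.0, 0.0), np.exp(-t ** 2)))
print("Cauchy case:", np.allclose(stable_cf(t, 1.0, 0.0), np.exp(-np.abs(t))))
```

The α = 1 branch is kept separate because the tangent term is undefined there; the simple rescaling used in the sum check applies only to α ≠ 1.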

Advantages and Disadvantages

Stable distributions, including the alpha-stable distribution, can be invaluable for describing data, but for α < 2 they have no finite moments of order α or higher. In particular, the variance is infinite, which limits the usefulness of any theory based on variance. One remedy is to truncate the tails so that the model better matches real-world behavior. Such modifications require an understanding not only of how each distribution behaves but also of when truncation is and is not appropriate, which usually depends on specialist knowledge of the field in question. Paul & Baschnagel illustrate this with heartbeat measurements: if we know that large deviations are not physically feasible, then tail truncation provides a more accurate model than the untruncated distribution would.
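The effect of truncation can be sketched with simulated data. The example below draws samples from a symmetric stable distribution with α = 1.5 and discards everything beyond a cutoff, which amounts to sampling from the truncated distribution by rejection. The cutoff of 10 is a hypothetical physical bound chosen only for illustration, and this is one simple way to truncate, not necessarily the approach taken in the references.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Symmetric stable samples with alpha = 1.5: the theoretical variance is infinite
samples = stats.levy_stable.rvs(
    1.5, 0.0, loc=0.0, scale=1.0, size=20_000, random_state=rng
)

cutoff = 10.0  # hypothetical bound beyond which values are treated as impossible
truncated = samples[np.abs(samples) <= cutoff]

print("largest |x| before truncation:", np.abs(samples).max())
print("sample variance, raw:         ", samples.var())
print("sample variance, truncated:   ", truncated.var())
print("fraction of samples kept:     ", truncated.size / samples.size)
```

The raw sample variance is dominated by a few extreme draws and does not settle as the sample grows, while the truncated variance can never exceed the square of the cutoff.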

References:

Barndorff-Nielsen, O. E., Mikosch, T. and Resnick, S. I. (2001). Lévy Processes: Theory and Applications.

Fama, E. F. and Roll, R. (1968). Some Properties of Symmetric Stable Distributions.

Lévy, P. (1925). Calcul des probabilités.

Mann, J. S. and Heifner, R. G. (1976). The Distribution of Shortrun Commodity Price Movements, Issues 1535-1538. U.S. Dept. of Agriculture, Economic Research Service.

Paul, W. and Baschnagel, J. Stochastic Processes: From Physics to Finance.