A tuning parameter (λ), sometimes called a penalty parameter, controls the strength of the penalty term in ridge regression and lasso regression. It essentially sets the amount of shrinkage, where coefficient estimates are shrunk towards a central point, usually zero. Shrinkage results in simpler models (and, with the lasso, sparse ones), which are easier to analyze than high-dimensional models with large numbers of parameters.

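- When λ = 0, no parameters are eliminated. The estimate is equal to the one found with ordinary (least squares) linear regression.

- As λ increases, coefficients are shrunk further towards zero. With the lasso, more and more of them are set exactly to zero and eliminated.

- As λ → ∞, all coefficients are shrunk to zero. With the lasso, every coefficient is eliminated once λ is large enough.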
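The three cases above can be illustrated with a minimal sketch. It assumes orthonormal predictors and the criterion ½‖y − Xβ‖² + λ‖β‖₁, in which case each lasso coefficient is the least-squares coefficient pushed towards zero by λ and set to zero if it would cross it (soft thresholding). The starting coefficients and the λ values are hypothetical and chosen only for illustration.

```python
def soft_threshold(b, lam):
    """Lasso estimate of one coefficient, assuming orthonormal predictors."""
    if b > lam:
        return b - lam
    if b < -lam:
        return b + lam
    return 0.0  # coefficients no larger than lam in size are eliminated


def lasso_path(ols_coefs, lambdas):
    """Map each lambda to the shrunken coefficient vector."""
    return {lam: [soft_threshold(b, lam) for b in ols_coefs] for lam in lambdas}


# Hypothetical least-squares estimates (illustration only).
ols = [3.0, -1.5, 0.8, 0.2]

path = lasso_path(ols, [0.0, 0.5, 1.0, 2.0, 5.0])
for lam, coefs in path.items():
    kept = sum(c != 0.0 for c in coefs)
    print(f"lambda={lam}: coefficients={coefs}, nonzero={kept}")
```

With λ = 0 the least-squares estimates are returned unchanged, and the count of nonzero coefficients can only fall as λ grows. For correlated predictors there is no such closed form, and the solution has to be computed iteratively.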

There is a trade-off between bias and variance in the resulting estimators. As λ increases, bias increases and variance decreases; as λ decreases, bias decreases and variance increases. For example, setting your tuning parameter to a low value keeps more parameters in the model and gives lower bias, but at the expense of a larger variance. A high value gives a more manageable number of model parameters and lower variance, but at the expense of a larger bias.
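The trade-off can be made concrete with a deliberately simple worked case, offered as a sketch rather than a general result. Assume (these symbols are not in the original) independent observations x_1, …, x_n with mean μ and variance σ², and estimate μ with a ridge-type criterion:

```latex
\hat{\mu}_\lambda
  = \arg\min_{\mu}\Big\{ \sum_{i=1}^{n} (x_i - \mu)^2 + n\lambda\mu^2 \Big\}
  = \frac{\bar{x}}{1+\lambda},
\qquad
\operatorname{Bias}(\hat{\mu}_\lambda) = -\frac{\lambda}{1+\lambda}\,\mu,
\qquad
\operatorname{Var}(\hat{\mu}_\lambda) = \frac{\sigma^2}{n\,(1+\lambda)^2}.
```

At λ = 0 the estimator is the unbiased sample mean with variance σ²/n. As λ grows, the variance falls towards zero while the bias approaches −μ.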

L1 and L2 Penalties

Tuning parameters are part of a process called regularization, which works by biasing coefficient estimates towards particular values (usually zero). Popular regularization methods use either an L1 or L2 penalty (or sometimes, a combination of both):

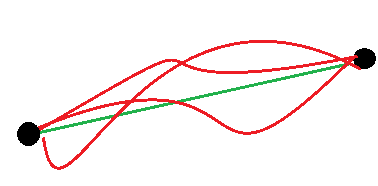

- L1 penalties limit the size of the coefficients and can result in sparse models (i.e. models with a small number of nonzero coefficients); some coefficients are set exactly to zero and eliminated.

- L2 penalties do not result in sparse models: all coefficients are shrunk towards zero (by the same factor when the predictors are orthonormal), but none are eliminated.
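For reference, the penalized least-squares criteria behind these methods are usually written as follows. The notation is an assumption not taken from the original: y is the response vector, X the matrix of predictors, β the vector of p coefficients. Scaling conventions vary, and some texts multiply the first term by 1/2 or 1/(2n).

```latex
\begin{align*}
\text{Lasso (L1):} \quad
  \hat{\beta} &= \arg\min_{\beta}\Big\{ \|y - X\beta\|_2^2
      + \lambda \sum_{j=1}^{p} |\beta_j| \Big\} \\
\text{Ridge (L2):} \quad
  \hat{\beta} &= \arg\min_{\beta}\Big\{ \|y - X\beta\|_2^2
      + \lambda \sum_{j=1}^{p} \beta_j^2 \Big\} \\
\text{Elastic net (both):} \quad
  \hat{\beta} &= \arg\min_{\beta}\Big\{ \|y - X\beta\|_2^2
      + \lambda_1 \sum_{j=1}^{p} |\beta_j|
      + \lambda_2 \sum_{j=1}^{p} \beta_j^2 \Big\}
\end{align*}
```

The absolute value in the L1 penalty is what allows coefficients to land exactly on zero; the squared L2 penalty is smooth at zero, so it shrinks coefficients without eliminating them.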

How to Choose a Tuning Parameter

Choosing a tuning parameter is a challenging task. Optimal tuning parameters are “difficult to calibrate in practice” (Lederer and Müller, 2015) and are “not practically feasible” (Fan & Tang, 2013). They depend on a quagmire of hard-to-quantify quantities, such as nuisance parameters in the population model. Specific techniques have their proponents and opponents, making the task even more difficult. For example, Tibshirani (2013) calls cross validation (a somewhat popular method for finding tuning parameters) “…a simple, intuitive way to estimate prediction error”, while Chand (n.d.) states the method “almost always fail[s] to achieve consistent variable selection”.
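As a rough illustration of the cross validation idea mentioned above, the sketch below picks λ for a one-predictor ridge regression without an intercept, where the slope has the closed form Σxy / (Σx² + λ). The simulated data, the candidate λ values, the number of folds, and the function names are all assumptions made for the example, not part of any cited method.

```python
import random


def ridge_1d(xs, ys, lam):
    """Ridge slope for a single predictor with no intercept."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_error(xs, ys, lam, k=5):
    """Mean squared prediction error estimated by k-fold cross validation."""
    n = len(xs)
    total = 0.0
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # every k-th point is held out
        train_x = [xs[i] for i in range(n) if i not in test_idx]
        train_y = [ys[i] for i in range(n) if i not in test_idx]
        slope = ridge_1d(train_x, train_y, lam)
        total += sum((ys[i] - slope * xs[i]) ** 2 for i in test_idx)
    return total / n


# Simulated data (illustration only): true slope 1.5, unit-variance noise.
rng = random.Random(1)
xs = [rng.gauss(0, 1) for _ in range(40)]
ys = [1.5 * x + rng.gauss(0, 1) for x in xs]

lambdas = [0.0, 0.1, 1.0, 10.0, 100.0]
cv_scores = {lam: cv_error(xs, ys, lam) for lam in lambdas}
best_lam = min(cv_scores, key=cv_scores.get)
print("CV error by lambda:", cv_scores)
print("lambda with the lowest CV error:", best_lam)
```

This targets prediction error only. It says nothing about whether the selected model contains the right variables, which is the concern Chand raises.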

Although there isn’t an “optimal” tuning parameter for any particular scenario, finding one is necessary for any analysis involving high-dimensional data. Fan & Tang recommend:

- Choose a regularization method. For example:

  - Lasso regression (L1)

  - Ridge regression (L2)

  - Smoothly clipped absolute deviation (SCAD)

  - Elastic net (a combination of L1 and L2)

  - Adaptive lasso

- Use a sequence of tuning parameters to create a series of different models.

- Study the different models and select one that best fits your needs. Various methods for model selection exist, including Mallows's Cp, Akaike's Information Criterion (AIC) and the Bayesian Information Criterion (BIC).
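The three steps can be sketched end to end under stated assumptions: the lasso is the chosen method, it is fitted by a plain coordinate descent on the criterion (1/(2n))‖y − Xβ‖² + λ‖β‖₁, and models are compared with one common Gaussian form of BIC, n·log(RSS/n) + k·log(n), where k is the number of nonzero coefficients. The simulated data, the λ grid, and all function names are hypothetical; this is not the procedure from Fan & Tang's paper.

```python
import math
import random


def soft_threshold(z, lam):
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_cd(X, y, lam, n_iter=200):
    """Lasso by cyclic coordinate descent (no intercept)."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    resid = list(y)  # residual y - X @ beta, starting from beta = 0
    for _ in range(n_iter):
        for j in range(p):
            # Covariance of column j with the residual that ignores beta[j].
            rho = sum(X[i][j] * resid[i] for i in range(n)) / n + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam) / col_sq[j]
            delta = new - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new
    return beta


def bic(X, y, beta):
    """One common Gaussian BIC; k counts the nonzero coefficients."""
    n = len(y)
    rss = sum((y[i] - sum(x * b for x, b in zip(X[i], beta))) ** 2 for i in range(n))
    k = sum(b != 0.0 for b in beta)
    return n * math.log(rss / n) + k * math.log(n)


# Simulated data (illustration only): two of five predictors matter.
rng = random.Random(0)
true_beta = [2.0, 0.0, -1.5, 0.0, 0.0]
X = [[rng.gauss(0, 1) for _ in true_beta] for _ in range(60)]
y = [sum(x * b for x, b in zip(row, true_beta)) + rng.gauss(0, 0.5) for row in X]

# Step 2: a sequence of tuning parameters gives a series of models.
lambdas = [1.0, 0.5, 0.2, 0.1, 0.05, 0.01]
fits = {lam: lasso_cd(X, y, lam) for lam in lambdas}

# Step 3: score each model and keep the one with the lowest BIC.
scores = {lam: bic(X, y, beta) for lam, beta in fits.items()}
best_lam = min(scores, key=scores.get)
print("selected lambda:", best_lam)
print("coefficients:", fits[best_lam])
```

Swapping `bic` for an AIC or Cp function changes only the last step, so the same grid of fitted models can be reused to compare selection criteria.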

Although the concept sounds simple (choose a method, then choose a model), it doesn’t work well in some cases. For example, the number of candidate models becomes unwieldy when the dimensionality p grows exponentially with the sample size n. For this situation, Fan and Tang (2013) note that “To the best of our knowledge, there is no existing work accommodating tuning parameter selection for general penalized likelihood methods.”

References:

Chand, S. (n.d.). On Tuning Parameter Selection of Lasso-Type Methods – A Monte Carlo Study. Proceedings of 2012 9th International Bhurban Conference on Applied Sciences & Technology (IBCAST) 120 Islamabad, Pakistan, 9th – 12th January, 2012. Retrieved 8/14/2017 from: http://www.cmap.polytechnique.fr/~lepennec/enseignement/M2Orsay/06177542.pdf.

Fan, Y. and Tang, C. Y. (2013). Tuning parameter selection in high dimensional penalized likelihood. J. R. Statist. Soc. B, 75, Part 3, pp. 531–552. Retrieved 8/14/2017 from: http://www-bcf.usc.edu/~fanyingy/publications/JRSSB-FT13.pdf

Kotz, S.; et al., eds. (2006), Encyclopedia of Statistical Sciences, Wiley.

Lederer and Müller (2015). Don’t Fall for Tuning Parameters: Tuning-Free Variable Selection in High Dimensions With the TREX. Retrieved 8/14/2017 from: https://arxiv.org/abs/1404.0541

Tibshirani, R. (2013). Model selection and validation 1: Cross-validation. PPT. Retrieved 8/14/2017 from: http://www.stat.cmu.edu/~ryantibs/datamining/lectures/18-val1.pdf