Regression Analysis > Regularization

What is Regularization?

Regularization is a way to avoid overfitting by penalizing high-valued regression coefficients. In simple terms, it reduces parameters and shrinks (simplifies) the model. This more streamlined, more parsimonious model will likely perform better at predictions. Regularization adds penalties to more complex models and then sorts potential models from least overfit to greatest; The model with the lowest “overfitting” score is usually the best choice for predictive power.

Why is Regularization Necessary?

Regularization is necessary because least squares regression methods, where the residual sum of squares is minimized, can be unstable. This is especially true if there is multicollinearity in the model. However, the mere practice of model fitting comes with a major pitfall: any set of data can be fitted to a model, even if that model is ridiculously complex.

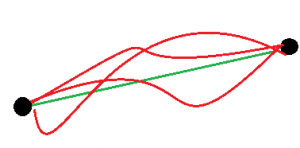

For example, take a simple data set of two points. The simplest model is a straight line through the two points, or a first degree polynomial. However, an infinite number of other models could also fit second degree, third degree polynomials—and so on.

Fitting a small amount of data will often lead to a complex, overfit model. A simpler model may be underfit and will perform poorly with predictions. Just because two data points fit a line perfectly doesn’t mean that a third point will fall exactly on that line — in fact, it’s highly unlikely. Simply put, regularization penalizes models that are more complex in favor of simpler models (ones with smaller regression coefficients) — but not at the expense of reducing predictive power.

Penalty Terms

Regularization works by biasing data towards particular values (such as small values near zero). The bias is achieved by adding a tuning parameter to encourage those values:

- L1 regularization adds an L1 penalty equal to the absolute value of the magnitude of coefficients. In other words, it limits the size of the coefficients. L1 can yield sparse models (i.e. models with few coefficients); Some coefficients can become zero and eliminated. Lasso regression uses this method.

- L2 regularization adds an L2 penalty equal to the square of the magnitude of coefficients. L2 will not yield sparse models and all coefficients are shrunk by the same factor (none are eliminated). Ridge regression and SVMs use this method.

- Elastic nets combine L1 & L2 methods, but do add a hyperparameter (see this paper by Zou and Hastie).

References

Bühlmann, Peter; Van De Geer, Sara (2011). “Statistics for High-Dimensional Data“. Springer Series in Statistics