Statistics Definitions > Multicollinearity

What is Multicollinearity?

Multicollinearity can adversely affect your regression results.

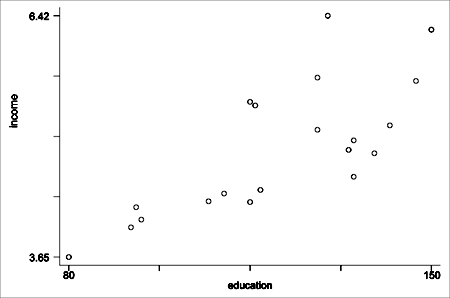

Multicollinearity generally occurs when there are high correlations between two or more predictor variables. In other words, one predictor variable can be used to predict the other. This creates redundant information, skewing the results in a regression model. Examples of correlated predictor variables (also called multicollinear predictors) are: a person’s height and weight, age and sales price of a car, or years of education and annual income.

An easy way to detect multicollinearity is to calculate correlation coefficients for all pairs of predictor variables. If the correlation coefficient, r, is exactly +1 or -1, this is called perfect multicollinearity. If r is close to or exactly -1 or +1, one of the variables should be removed from the model if at all possible.

It’s more common for multicollineariy to rear its ugly head in observational studies; it’s less common with experimental data. When the condition is present, it can result in unstable and unreliable regression estimates. Several other problems can interfere with analysis of results, including:

- The t-statistic will generally be very small and coefficient confidence intervals will be very wide. This means that it is harder to reject the null hypothesis.

- The partial regression coefficient may be an imprecise estimate; standard errors may be very large.

- Partial regression coefficients may have sign and/or magnitude changes as they pass from sample to sample.

- Multicollinearity makes it difficult to gauge the effect of independent variables on dependent variables.

What Causes Multicollinearity?

The two types are:

- Data-based multicollinearity: caused by poorly designed experiments, data that is 100% observational, or data collection methods that cannot be manipulated. In some cases, variables may be highly correlated (usually due to collecting data from purely observational studies) and there is no error on the researcher’s part. For this reason, you should conduct experiments whenever possible, setting the level of the predictor variables in advance.

- Structural multicollinearity: caused by you, the researcher, creating new predictor variables.

Causes for multicollinearity can also include:

- Insufficient data. In some cases, collecting more data can resolve the issue.

- Dummy variables may be incorrectly used. For example, the researcher may fail to exclude one category, or add a dummy variable for every category (e.g. spring, summer, autumn, winter).

- Including a variable in the regression that is actually a combination of two other variables. For example, including “total investment income” when total investment income = income from stocks and bonds + income from savings interest.

- Including two identical (or almost identical) variables. For example, weight in pounds and weight in kilos, or investment income and savings/bond income.

Next: Variance Inflation Factors.

References

Beyer, W. H. CRC Standard Mathematical Tables, 31st ed. Boca Raton, FL: CRC Press, pp. 536 and 571, 2002.

Dodge, Y. (2008). The Concise Encyclopedia of Statistics. Springer.

Klein, G. (2013). The Cartoon Introduction to Statistics. Hill & Wamg.

Vogt, W.P. (2005). Dictionary of Statistics & Methodology: A Nontechnical Guide for the Social Sciences. SAGE.