What is a Bernoulli Distribution?

A Bernoulli distribution is a discrete probability distribution for a Bernoulli trial: a random experiment that has only two outcomes (usually called a “Success” or a “Failure”). For example, the probability of getting heads (a “success”) when flipping a fair coin is 0.5. The probability of “failure” is 1 – p (1 minus the probability of success p, which also equals 0.5 for a coin toss). It is a special case of the binomial distribution for n = 1. In other words, it is a binomial distribution with a single trial (e.g. a single coin toss).
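A single Bernoulli trial is easy to simulate. The sketch below is a minimal illustration, not a reference implementation. The function name `bernoulli_trial` is hypothetical, and the code uses only Python's standard `random` module. It returns 1 (success) with probability p and 0 (failure) otherwise, so repeating it many times should give a proportion of successes close to p.

```python
import random

def bernoulli_trial(p: float, rng: random.Random) -> int:
    """Return 1 ("success") with probability p, else 0 ("failure")."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    # rng.random() is uniform on [0, 1), so it falls below p with probability p.
    return 1 if rng.random() < p else 0

# Simulate 10,000 fair coin tosses (heads = success, p = 0.5).
rng = random.Random(42)  # fixed seed so the run is reproducible
tosses = [bernoulli_trial(0.5, rng) for _ in range(10_000)]
print(sum(tosses) / len(tosses))  # proportion of heads, close to 0.5
```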


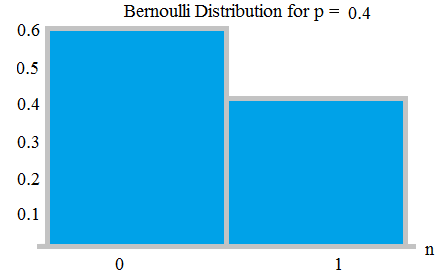

On the x-axis, failure is labeled 0 and success is labeled 1. In the following Bernoulli distribution, the probability of success (1) is 0.4, and the probability of failure (0) is 0.6:

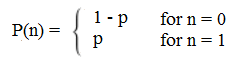

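The probability mass function (pmf) for this distribution, often loosely called its pdf, is

$$P(X = x) = p^x (1 - p)^{1 - x}, \quad x \in \{0, 1\},$$

which can also be written as:

$$P(X = x) = \begin{cases} p & \text{if } x = 1 \\ 1 - p & \text{if } x = 0 \end{cases}$$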

The expected value of a Bernoulli random variable X is:

E[X] = p.

For example, if p = 0.04, then E[X] = 0.04.
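The result follows directly from the definition of expected value, because X takes only the values 0 (with probability 1 – p) and 1 (with probability p):

$$E[X] = \sum_{x \in \{0, 1\}} x \, P(X = x) = 0 \cdot (1 - p) + 1 \cdot p = p$$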

The variance of a Bernoulli random variable is:

Var[X] = p(1 – p).
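The variance formula follows from Var[X] = E[X²] – (E[X])². Because X is either 0 or 1, X² = X, so E[X²] = p and Var[X] = p – p² = p(1 – p). The minimal Python sketch below checks both formulas numerically for the distribution in the figure above (p = 0.4) by summing directly over the pmf p^x (1 – p)^(1 – x). The helper names `bernoulli_pmf` and `mean_and_variance` are hypothetical, chosen for illustration.

```python
import math

def bernoulli_pmf(x: int, p: float) -> float:
    """P(X = x) = p**x * (1 - p)**(1 - x) for x in {0, 1}."""
    if x not in (0, 1):
        return 0.0  # a Bernoulli variable takes no other values
    return p ** x * (1 - p) ** (1 - x)

def mean_and_variance(p: float) -> tuple[float, float]:
    """Compute E[X] and Var[X] from the definitions, summing over x = 0, 1."""
    mean = sum(x * bernoulli_pmf(x, p) for x in (0, 1))
    variance = sum((x - mean) ** 2 * bernoulli_pmf(x, p) for x in (0, 1))
    return mean, variance

p = 0.4
print(bernoulli_pmf(0, p), bernoulli_pmf(1, p))  # 0.6 0.4
mean, var = mean_and_variance(p)
assert math.isclose(mean, p)           # E[X] = p
assert math.isclose(var, p * (1 - p))  # Var[X] = p(1 - p), about 0.24 here
```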

What is a Bernoulli Trial?

A Bernoulli trial is one of the simplest experiments you can conduct. It’s an experiment with exactly two possible outcomes, such as “Yes” and “No” or “Heads” and “Tails.” A few examples:

- Coin tosses: record how many coins land heads up and how many land tails up.

- Births: how many boys are born and how many girls are born each day.

- Rolling dice: the probability that a roll of two dice results in a double six.
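The dice example becomes a Bernoulli trial once you decide what counts as a success. In the sketch below (an illustration only; the helper name `double_six_probability` is hypothetical), success means both dice show six. Enumerating all 36 equally likely outcomes of two fair dice gives the exact success probability, so each roll is a Bernoulli trial with p = 1/36.

```python
from fractions import Fraction
from itertools import product

def double_six_probability() -> Fraction:
    """Exact probability that a roll of two fair dice is a double six."""
    outcomes = list(product(range(1, 7), repeat=2))  # all 36 (die1, die2) pairs
    successes = sum(1 for a, b in outcomes if a == 6 and b == 6)
    return Fraction(successes, len(outcomes))

p = double_six_probability()
print(p, 1 - p)  # 1/36 35/36: success and failure probabilities
```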

Independence

An important requirement for a series of Bernoulli trials is that the trials be independent and that the probability of success stay the same from one trial to the next. Each trial must be completely separate: its outcome has nothing to do with the outcome of any previous trial.

Winning a scratch-off lottery is an independent event: your odds of winning on one ticket are the same as your odds on any other ticket. Drawing lotto numbers, on the other hand, is a dependent event. Lotto numbers are drawn from a set of balls that are not replaced, so the probability of a particular number being picked next depends on how many balls are left. When there are a hundred balls, the probability that any given number will be picked is 1/100, but when only ten balls are left, the probability shoots up to 1/10. While it’s possible to find those probabilities, the draws are not Bernoulli trials, because they are not independent: each draw changes the probabilities for the next one.
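The lottery contrast can be made concrete. The sketch below is a minimal illustration, assuming a hypothetical drum of 100 numbered balls; the function name `prob_specific_ball_next` is also hypothetical. It computes the probability that one particular ball, not yet drawn, is picked on the next draw. Without replacement that probability changes as balls are removed; with replacement it stays fixed, as it must for a series of Bernoulli trials.

```python
from fractions import Fraction

def prob_specific_ball_next(total_balls: int, already_drawn: int, replace: bool) -> Fraction:
    """P(a specific, not-yet-drawn ball is picked on the next draw)."""
    if replace:
        # Drawn balls go back in the drum, so every draw looks the same.
        return Fraction(1, total_balls)
    remaining = total_balls - already_drawn
    if remaining <= 0:
        raise ValueError("no balls left to draw")
    # Without replacement, the chance depends on how many balls are left.
    return Fraction(1, remaining)

for drawn in (0, 50, 90):
    print(drawn,
          prob_specific_ball_next(100, drawn, replace=False),
          prob_specific_ball_next(100, drawn, replace=True))
```

Without replacement the printed probabilities climb from 1/100 to 1/50 to 1/10; with replacement they stay at 1/100.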

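The Bernoulli process (a sequence of independent Bernoulli trials, each with the same probability of success p) leads to several probability distributions:

- Binomial distribution: the number of successes in a fixed number of trials, n.

- Geometric distribution: the number of trials needed to get the first success.

- Negative binomial distribution: the number of trials needed to get a fixed number of successes, r.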

The Bernoulli distribution is closely related to the binomial distribution. As long as the individual Bernoulli trials are independent and share the same probability of success p, the number of successes in a series of n trials has a binomial distribution. The Bernoulli distribution can also be defined as the binomial distribution with n = 1.
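One way to see this connection is to build the distribution of the number of successes one trial at a time. The minimal sketch below (the helper names `successes_pmf` and `binomial_pmf` are hypothetical) starts from a single Bernoulli trial and adds trials one by one, which is valid only because the trials are independent with the same p. The result matches the binomial formula C(n, k) p^k (1 – p)^(n – k), and for n = 1 it reduces to the Bernoulli pmf.

```python
import math

def successes_pmf(n: int, p: float) -> list[float]:
    """pmf of the number of successes in n independent Bernoulli(p) trials,
    built by adding one trial at a time (repeated convolution)."""
    dist = [1.0]  # zero trials: 0 successes with probability 1
    for _ in range(n):
        new = [0.0] * (len(dist) + 1)
        for k, prob in enumerate(dist):
            new[k] += prob * (1 - p)  # this trial fails: k stays the same
            new[k + 1] += prob * p    # this trial succeeds: k goes up by 1
        dist = new
    return dist

def binomial_pmf(k: int, n: int, p: float) -> float:
    """Binomial formula C(n, k) * p**k * (1 - p)**(n - k)."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)

n, p = 5, 0.4
built = successes_pmf(n, p)
for k in range(n + 1):
    assert math.isclose(built[k], binomial_pmf(k, n, p))
print(successes_pmf(1, p))  # n = 1 gives the Bernoulli pmf [1 - p, p]
```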

Use of the Bernoulli Distribution in Epidemiology

In experiments and clinical trials, the Bernoulli distribution is sometimes used to model whether a single individual experiences an event such as death, a disease, or disease exposure. The parameter p is then the probability that the person has the event in question:

- 1 = “event” (probability p)

- 0 = “non-event” (probability 1 – p)
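With outcomes coded this way, a natural estimate of p for a group of individuals is the proportion who experienced the event; this sample proportion is also the maximum-likelihood estimate of p. The sketch below uses a short, hypothetical list of outcomes purely for illustration (it is not real study data), and the function name `estimate_event_probability` is likewise hypothetical.

```python
def estimate_event_probability(outcomes: list[int]) -> float:
    """Estimate p as the share of individuals coded 1 ("event")."""
    if not outcomes:
        raise ValueError("need at least one outcome")
    if any(y not in (0, 1) for y in outcomes):
        raise ValueError("outcomes must be coded 0 (non-event) or 1 (event)")
    return sum(outcomes) / len(outcomes)

# Hypothetical outcomes for 8 individuals: 1 = event, 0 = non-event.
outcomes = [0, 1, 0, 0, 1, 0, 0, 0]
print(estimate_event_probability(outcomes))  # 0.25
```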

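Bernoulli distributions are also used in logistic regression to model disease occurrence: each person’s 0/1 outcome is treated as a Bernoulli trial whose probability p depends on predictors (such as age or exposure) through the logistic function.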
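As a rough illustration of that idea, the sketch below assumes a hypothetical one-predictor model with made-up coefficients b0 and b1 (not estimates from any study), and the helper names are hypothetical too. The logistic function turns the linear predictor b0 + b1·x into a probability p between 0 and 1. Each person’s outcome is then scored with the Bernoulli pmf, and the summed log-likelihood is the quantity logistic regression maximizes when it fits the coefficients.

```python
import math

def logistic(z: float) -> float:
    """Map any real number to a probability in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow in exp(-z) for large negative z
    return e / (1.0 + e)

def bernoulli_log_likelihood(xs: list[float], ys: list[int], b0: float, b1: float) -> float:
    """Sum of log P(Y = y) for Bernoulli outcomes with p = logistic(b0 + b1 * x)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = logistic(b0 + b1 * x)
        # log of the Bernoulli pmf p**y * (1 - p)**(1 - y)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return total

# Hypothetical coefficients and data, for illustration only.
b0, b1 = -2.0, 0.5
print(logistic(b0 + b1 * 4.0))  # x = 4 makes the linear predictor 0, so p = 0.5
print(bernoulli_log_likelihood([1.0, 4.0, 6.0], [0, 1, 1], b0, b1))
```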
