What is Agglomerative Clustering?

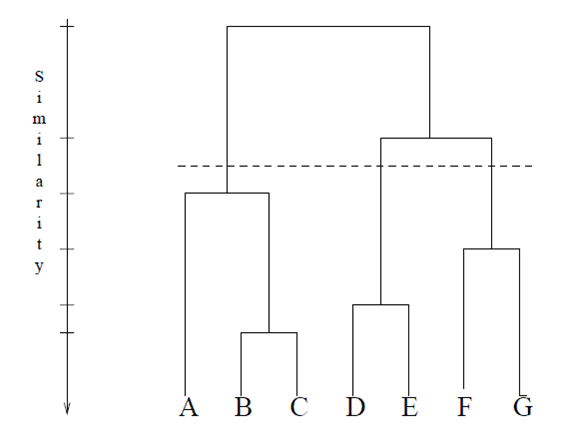

Agglomerative clustering (also called (Hierarchical Agglomerative Clustering, or HAC)) is a “bottom up” type of hierarchical clustering. In this type of clustering, each data point is defined as a cluster. Pairs of clusters are merged as the algorithm moves up in the hierarchy.

The majority of hierarchical clustering algorithms are Hierarchical Agglomerative Clustering algorithms.

Agglomerative Clustering Methods

In agglomerative clustering, each document is treated as a single cluster at the beginning of the algorithm. After that, clusters can be combined through a variety of methods. They all involve calculating dissimilarities between objects; Exactly how that dissimilarity is calculated can vary. The most commonly used are:

- Single Linkage: similarity is calculated for the closest pair. One drawback is that groups with close pairs can merge sooner than is optimal, even if those groups have overall dissimilarity.

- Complete Linkage: calculates similarity of the farthest away pair. One disadvantage to this method is that outliers can cause less-than-optimal merging.

- Average Linkage, or group linkage: similarity is calculated between groups of objects, rather than individual objects.

- Centroid Method: each iteration merges the clusters with the most similar centroid. A centroid is the average of all points in the system.

- Ward’s Method (ANOVA based): At each step, the process makes a new cluster that minimizes variance, measured by an index called E (also called the sum of squares index).

With all the methods, the pairing process continues until all items are merged into a single cluster. Often, you may want to try several methods and compare the results to decide which is the most appropriate clustering for your application. There is no uniformly best method.

Disadvantages

One downside of HACs is that they have large storage requirements, and they can be computationally intensive. This is especially true for big data. These complex algorithms are about four times the size of the K-means algorithm. Also, merging can’t be reversed, which can create a problem if you have noisy, high-dimensional data.

References

Aggarwal, C. (2013). Data Clustering: Algorithms and Applications (Chapman & Hall/CRC Data Mining and Knowledge Discovery Series Book 31). Chapman & Hall/CRC.