Posterior Probability & the Posterior Distribution

What is Posterior Probability?

Prior probability is your estimate of how likely something is before any new evidence is taken into account. Posterior probability updates that estimate by factoring in subsequent data and adjusting your prior belief accordingly; it’s effectively like taking another look with fresh eyes.

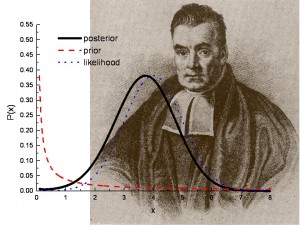

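Posterior probability = prior probability combined with new evidence (called the likelihood). The combination is multiplication rather than literal addition: by Bayes' theorem, the posterior is proportional to the prior times the likelihood.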
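As a minimal sketch of how prior and likelihood combine for a single yes/no hypothesis, the snippet below applies Bayes' theorem: multiply the prior by the likelihood, then normalize. The function name `posterior_probability` and the numbers in the usage line are illustrative assumptions, not values from this article.

```python
def posterior_probability(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) via Bayes' theorem.

    prior: P(hypothesis) before seeing the evidence
    p_evidence_if_true: P(evidence | hypothesis), the likelihood
    p_evidence_if_false: P(evidence | not hypothesis)
    """
    # Unnormalized weights: prior times likelihood for each case.
    weight_true = prior * p_evidence_if_true
    weight_false = (1.0 - prior) * p_evidence_if_false
    # Dividing by the total makes the two posteriors sum to 1.
    return weight_true / (weight_true + weight_false)


# Hypothetical numbers: a 30% prior, and evidence that is four times
# more likely when the hypothesis is true than when it is false.
print(posterior_probability(0.30, 0.80, 0.20))
```

With these assumed inputs the posterior works out to 0.24 / 0.38, or about 0.63: the evidence roughly doubles the initial 30% belief.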

To see how posterior probability works, imagine you want to estimate what proportion of college students finish their degree within six years. Historical records put your prior estimate at 60%. After surveying more recent graduates, however, you find the figure may be closer to 50%; that revised estimate is the posterior probability.
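One common way to make this kind of update concrete is a Beta-Binomial model, and the sketch below assumes it. The prior strength (weighted like 100 earlier students) and the survey counts (100 of 200 finishing) are hypothetical numbers chosen to match the 60% and 50% figures; they are not data from the example.

```python
def update_beta(prior_a, prior_b, successes, failures):
    """Conjugate update: Beta(a, b) prior + binomial data -> Beta posterior."""
    return prior_a + successes, prior_b + failures


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


# Assumed prior: 60% completion, weighted like 100 earlier students.
prior_a, prior_b = 60, 40
# Assumed survey: 100 of 200 recent students finished within six years.
post_a, post_b = update_beta(prior_a, prior_b, successes=100, failures=100)

print(beta_mean(prior_a, prior_b))  # prior mean
print(beta_mean(post_a, post_b))    # posterior mean, pulled toward the data
```

Under these assumptions the posterior mean is 160/300, or about 0.53. It lands between the prior's 60% and the survey's 50%, and sits closer to the survey because the survey carries more observations than the prior was given.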

Origin of the Terms

The terms posterior and prior come from Latin philosophical terminology, specifically “a priori” and “a posteriori”. A priori describes knowledge that comes from reasoning about how principles work, independent of observation. Its opposite, a posteriori, describes knowledge that is derived from experience or observation.

What is a Posterior Distribution?

Bayesian analysis is an important tool for quantifying uncertainty, and the posterior distribution lies at its heart. The posterior combines prior information with data from observations or experiments and summarizes everything you know about a parameter after factoring in that new evidence. From it you can obtain point estimates and interval estimates of parameters, as well as probabilistic predictions about future data, all of which help inform decisions under uncertainty.
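To illustrate how these summaries can be read off a posterior distribution, here is a rough sketch that approximates the posterior over a proportion on a grid. The helper names `grid_posterior` and `credible_interval`, the Beta(60, 40)-shaped prior, and the survey counts (100 of 200) are all assumptions made for illustration.

```python
def grid_posterior(prior_pdf, successes, trials, n_grid=1001):
    """Approximate the posterior over a proportion on an evenly spaced grid."""
    grid = [i / (n_grid - 1) for i in range(n_grid)]
    # Unnormalized posterior: prior density times binomial likelihood.
    weights = [
        prior_pdf(p) * p**successes * (1 - p) ** (trials - successes)
        for p in grid
    ]
    total = sum(weights)
    return grid, [w / total for w in weights]


def credible_interval(grid, probs, mass=0.95):
    """Equal-tailed interval read off the cumulative posterior."""
    tail = (1 - mass) / 2
    lower = upper = None
    running = 0.0
    for p, w in zip(grid, probs):
        running += w
        if lower is None and running >= tail:
            lower = p
        if upper is None and running >= 1 - tail:
            upper = p
    return lower, upper


# Hypothetical inputs: a Beta(60, 40)-shaped prior (unnormalized)
# and a survey in which 100 of 200 students finished.
grid, probs = grid_posterior(lambda p: p**59 * (1 - p) ** 39, 100, 200)
post_mean = sum(p * w for p, w in zip(grid, probs))  # point estimate
low, high = credible_interval(grid, probs)           # interval estimate
print(post_mean, low, high)
```

With these assumed inputs the posterior mean comes out near 0.53, with a 95% credible interval of roughly 0.48 to 0.59. Under this model the posterior mean also serves as the predicted probability that the next student surveyed finishes within six years. A grid is used here only because it needs no external libraries; a conjugate Beta update or a statistics package would be the more usual choice.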