Phase Lag Definition

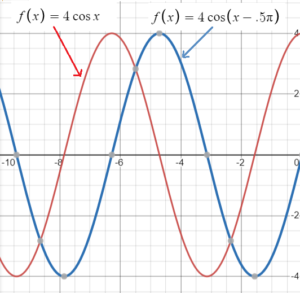

A phase lag represents a “shift” of a sinusoidal wave from zero phase.

Phase measures the relative time offset between two sinusoidal functions. A phase lag occurs when a system's response lags behind its input signal: the output waveform is delayed relative to the input, which corresponds to a negative phase shift. Its counterpart, a phase lead, occurs when one waveform is advanced relative to another.

In a phase-lag (or lowpass) system, a sinusoidal input produces a sinusoidal output with a phase lag.

Phase Lag Notation and Values

The formula for a sinusoidal function is [1]

f(t) = A cos (ω t – Φ)

Where:

- Φ is the phase lag, which is usually positive.

- ω is the angular frequency (in radians per unit time).

- A is the amplitude.
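As a rough illustration of the formula, the Python sketch below evaluates f(t) = A cos (ω t – Φ), treating ω as the angular frequency, and converts a phase lag into the corresponding time delay Φ / ω. The function names and the example values are assumptions chosen for illustration; they do not come from the cited sources.

```python
import math

def sinusoid(t, amplitude, omega, phase_lag):
    """Evaluate f(t) = A cos(omega * t - phase_lag)."""
    return amplitude * math.cos(omega * t - phase_lag)

def time_delay(omega, phase_lag):
    """Rightward time shift (same units as t) produced by a given phase lag."""
    return phase_lag / omega

# Example values (assumptions, for illustration only)
A = 2.0
omega = math.pi      # radians per unit time, so one period is 2 time units
phi = math.pi / 2    # a lag of one quarter of a period

t0 = time_delay(omega, phi)            # time at which the first peak occurs
peak = sinusoid(t0, A, omega, phi)     # equals the amplitude A at that time
```

With these values the peak that an unshifted cosine would reach at t = 0 is instead reached at t = Φ / ω, which is one quarter of the period.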

Not all conventions agree with this particular phase lag definition or notation. Some use Φ to represent the phase shift instead of the phase lag. Others require the phase lag to always be negative, which implies that the process results in a delay rather than an advance [2]. Double-check with your textbook or professor to see which convention you should use.

Under this convention, the phase lag is usually taken to be between 0 and 2π, where the ratio Φ / 2π is the fraction of a full period by which the sinusoidal function is shifted to the right, relative to cos (ω t). The phase lag can also be defined as the value of ω t at which the function reaches its maximum [3]. In other words, the phase lag sets the horizontal position of the graph:

- If Φ = 0, the graph has the position of cos (ω t),

- If Φ = π/2, the graph has the position of sin (ω t).
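The two special cases, and the statement that the maximum occurs where ω t equals Φ, can be checked numerically. The following Python sketch is one way to do so; the helper names and the 1000-point sampling grid are assumptions made for this example only.

```python
import math

def lagged_cosine(theta, phase_lag):
    """cos(theta - phase_lag), where theta stands for omega * t."""
    return math.cos(theta - phase_lag)

def fraction_of_period(phase_lag):
    """Fraction of a full period by which the graph is shifted to the right."""
    return (phase_lag % (2 * math.pi)) / (2 * math.pi)

# Sample one full period of theta = omega * t on an evenly spaced grid
thetas = [k * 2 * math.pi / 1000 for k in range(1000)]

# A phase lag of 0 reproduces cos; a phase lag of pi/2 reproduces sin
matches_cos = all(
    math.isclose(lagged_cosine(th, 0.0), math.cos(th), abs_tol=1e-12)
    for th in thetas
)
matches_sin = all(
    math.isclose(lagged_cosine(th, math.pi / 2), math.sin(th), abs_tol=1e-12)
    for th in thetas
)

# The sampled maximum sits at theta = phase lag
phi = math.pi / 2
theta_at_max = max(thetas, key=lambda th: lagged_cosine(th, phi))
quarter = fraction_of_period(phi)  # share of a period shifted to the right
```

Here `theta_at_max` lands on the grid point at π/2, and `fraction_of_period` reports that a lag of π/2 shifts the graph by one quarter of a period.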

Phase Lag Definition: References

Graphs: Desmos.com.

[1] MIT. Sinusoidal Solutions.

[2] MIT. Sinusoidal Functions. Retrieved November 12, 2021 from: https://ocw.mit.edu/courses/mathematics/18-03sc-differential-equations-fall-2011/unit-i-first-order-differential-equations/sinusoidal-functions/MIT18_03SCF11_s7_1text.pdf

[3] Module 7.1: Frequency Response.