
What is Orthogonality in Statistics?

Simply put, orthogonality means “uncorrelated.” An orthogonal model is one in which all of the independent variables are uncorrelated with each other. If two or more independent variables are correlated, the model is non-orthogonal.
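As a rough illustration of this definition, the sketch below checks whether a set of predictor columns is pairwise uncorrelated. The helper name `is_orthogonal`, the tolerance, and the toy data are assumptions made for this example, not part of any standard library.

```python
import numpy as np

def is_orthogonal(X, tol=1e-8):
    """Return True if every pair of columns in X has (near-)zero correlation.

    X is an (n_observations, n_variables) array. `tol` is an arbitrary
    cutoff chosen for this sketch.
    """
    corr = np.corrcoef(X, rowvar=False)      # correlation matrix of the columns
    off_diagonal = corr - np.eye(corr.shape[0])
    return bool(np.all(np.abs(off_diagonal) <= tol))

# Hypothetical predictors: x1 and x2 are uncorrelated; x3 is correlated with x1.
x1 = np.array([-1.0, -1.0, 1.0, 1.0])
x2 = np.array([-1.0, 1.0, -1.0, 1.0])
x3 = np.array([1.0, 2.0, 3.0, 5.0])

orthogonal_model = is_orthogonal(np.column_stack([x1, x2]))
non_orthogonal_model = is_orthogonal(np.column_stack([x1, x3]))
```

With real data the correlations will rarely be exactly zero, so the tolerance is a judgment call.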

In calculus-based statistics, you might also come across orthogonal functions: two functions are orthogonal if their inner product is zero. They are particularly useful for finding solutions to partial differential equations like Schrödinger’s equation and Maxwell’s equations.
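To make the inner-product idea concrete, here is a minimal numerical sketch. It assumes the usual inner product for real functions on an interval (the integral of f(x)·g(x)) and approximates it with the trapezoidal rule; the function name `inner_product`, the interval [-π, π], and the choice of sine and cosine are illustrative assumptions.

```python
import numpy as np

def inner_product(f, g, a, b, n=20001):
    """Approximate the integral of f(x) * g(x) over [a, b] with the trapezoidal rule."""
    x = np.linspace(a, b, n)
    y = f(x) * g(x)
    dx = x[1] - x[0]
    # Trapezoidal rule: full weight for interior points, half weight for the endpoints.
    return float(dx * (y.sum() - 0.5 * (y[0] + y[-1])))

# sin and cos are orthogonal on [-pi, pi]; sin is not orthogonal to itself.
sin_cos = inner_product(np.sin, np.cos, -np.pi, np.pi)
sin_sin = inner_product(np.sin, np.sin, -np.pi, np.pi)
```

Here `sin_cos` comes out at essentially zero, while `sin_sin` is close to π.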

Running Tests with Orthogonality

Orthogonality also makes a difference in how statistical tests are run. Orthogonal models have only one way to estimate model parameters and to run statistical tests. Non-orthogonal models have several ways to do this, which means that the results can be more complicated to interpret. In general, the more correlation there is between independent variables, the more cautiously you should interpret the results.
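One place this can show up is in how much variation gets credited to a predictor depending on the order in which it enters the model. The sketch below is an illustration chosen for this example rather than a method prescribed above: it uses ordinary least squares via `numpy.linalg.lstsq`, and the helper names and toy data are hypothetical.

```python
import numpy as np

def rss(columns, y):
    """Residual sum of squares from regressing y on an intercept plus `columns`."""
    X = np.column_stack([np.ones(len(y))] + list(columns))
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)

def ss_for_x1(x1, x2, y):
    """Sum of squares credited to x1 when it enters the model first vs. last."""
    entered_first = rss([], y) - rss([x1], y)
    entered_last = rss([x2], y) - rss([x1, x2], y)
    return entered_first, entered_last

# Hypothetical toy data.
y = np.array([1.0, 2.0, 4.0, 7.0])
x1 = np.array([-1.0, -1.0, 1.0, 1.0])
x2_uncorrelated = np.array([-1.0, 1.0, -1.0, 1.0])  # uncorrelated with x1
x2_correlated = np.array([-1.0, 1.0, 1.0, 1.0])     # correlated with x1

orth_first, orth_last = ss_for_x1(x1, x2_uncorrelated, y)
corr_first, corr_last = ss_for_x1(x1, x2_correlated, y)
```

With the uncorrelated predictor, the two numbers agree, so there is only one answer to report. With the correlated predictor they differ, and the analyst has to choose which one to use.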

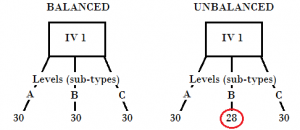

Whether a model is orthogonal or non-orthogonal is sometimes a judgment call. For example, let’s say you had four cells in an ANOVA: three cells have 10 subjects and the fourth cell has 9 subjects. Technically this is an unbalanced (and therefore non-orthogonal) design. However, the missing subject in one cell will have very little impact on the results. In practice, you can usually treat this slightly unbalanced design as orthogonal.
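To get a feel for how small the departure from orthogonality is, the sketch below assumes the four cells form a 2×2 layout with two factors coded +1/-1. Both the layout and the coding are assumptions made for illustration, since the example above does not say how the cells are arranged. The code measures the correlation between the two factor columns.

```python
import numpy as np

def factor_correlation(cell_counts):
    """Correlation between effect-coded factors A and B in a 2x2 design.

    `cell_counts` maps (a_code, b_code) pairs, each +1 or -1, to the
    number of subjects in that cell.
    """
    a_col, b_col = [], []
    for (a, b), n in cell_counts.items():
        a_col += [a] * n   # one row per subject
        b_col += [b] * n
    a = np.array(a_col, dtype=float)
    b = np.array(b_col, dtype=float)
    return float(np.corrcoef(a, b)[0, 1])

balanced = {(1, 1): 10, (1, -1): 10, (-1, 1): 10, (-1, -1): 10}
one_missing = {(1, 1): 10, (1, -1): 10, (-1, 1): 10, (-1, -1): 9}

r_balanced = factor_correlation(balanced)
r_one_missing = factor_correlation(one_missing)
```

Under these assumptions the balanced design gives a correlation of zero, and dropping one subject gives a correlation of about -0.026, which is small enough that most analysts would not worry about it.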

Roots in Matrix Algebra

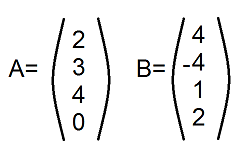

The term orthogonality comes from matrix algebra. Two vectors are orthogonal if the sum of the products of their corresponding elements (their dot product) is zero. For example, the sum of the element-wise products of these two vectors is zero:

2(4) + 3(-4) + 4(1) + 0(2) = 8 - 12 + 4 + 0 = 0.
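The same arithmetic can be checked in a couple of lines. The vectors below are read off the sum above; the variable names are just labels for this sketch.

```python
import numpy as np

u = np.array([2, 3, 4, 0])
v = np.array([4, -4, 1, 2])

# Multiply corresponding elements, then add them up.
elementwise_products = u * v
dot_product = int(np.dot(u, v))

# The vectors are orthogonal when the dot product is zero.
vectors_are_orthogonal = dot_product == 0
```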

(See: matrix multiplication for why this works.)

The same concept (i.e., matrix multiplication) can be applied to cells in a two-way table. Orthogonality is present in a model if any factor’s effects sum to zero across the effects of any other factors in the table.

References

Glen, S. (2020). Orthogonal Functions.