Contents:

- What is Bayesian Statistics?

- Bayesian vs. Frequentist

- Important Concepts in Bayesian Statistics

- Related Articles

1. What is Bayesian Statistics?

Bayesian statistics (sometimes called Bayesian inference) is a general approach to statistics which uses prior probabilities to answer questions like:

- Has this happened before?

- Is it likely, based on my knowledge of the situation, that it will happen?

Prior probability is a probability distribution that summarizes established beliefs about an event before (i.e. prior to) new evidence is considered. When the new evidence is added, the updated distribution is called the posterior probability. The probabilities, which you can think of as degrees of belief, are called Bayesian probabilities. For some examples of Bayes probability, see:

- Inverse Probability (which is another name for Bayes probability)

- Bayes Theorem Problems (some step-by-step examples of using Bayes Theorem)

Bayesian statistics is named after English statistician Thomas Bayes (1701–1761).
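
As a rough illustration of how a prior becomes a posterior, the sketch below applies Bayes' theorem, P(H | E) = P(E | H) P(H) / P(E), to a screening test. The function name and all of the numbers (a 1% prior, a 95% true positive rate, a 5% false positive rate) are hypothetical values chosen for the example, not figures from this article.

```python
def posterior_probability(prior: float, true_positive_rate: float,
                          false_positive_rate: float) -> float:
    """Return P(H | E) for a yes/no hypothesis H after observing evidence E."""
    # P(E): total probability of the evidence, whether or not H is true.
    evidence = (true_positive_rate * prior
                + false_positive_rate * (1.0 - prior))
    # Bayes' theorem: P(H | E) = P(E | H) * P(H) / P(E)
    return true_positive_rate * prior / evidence


# Hypothetical inputs, for illustration only.
prior = 0.01                 # belief in H before the evidence
true_positive_rate = 0.95    # P(E | H)
false_positive_rate = 0.05   # P(E | not H)

post = posterior_probability(prior, true_positive_rate, false_positive_rate)
print(f"prior = {prior:.3f}, posterior = {post:.3f}")
```

With these assumed inputs, the posterior is larger than the prior but still well below one half, because the event was rare to begin with.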

2. Bayesian vs. Frequentist

The main alternative to Bayesian statistics is frequentist statistics, the type of statistics you study in an elementary statistics class. In elementary statistics, you use rigid formulas and probabilities. Bayesian probabilities are a lot more flexible.

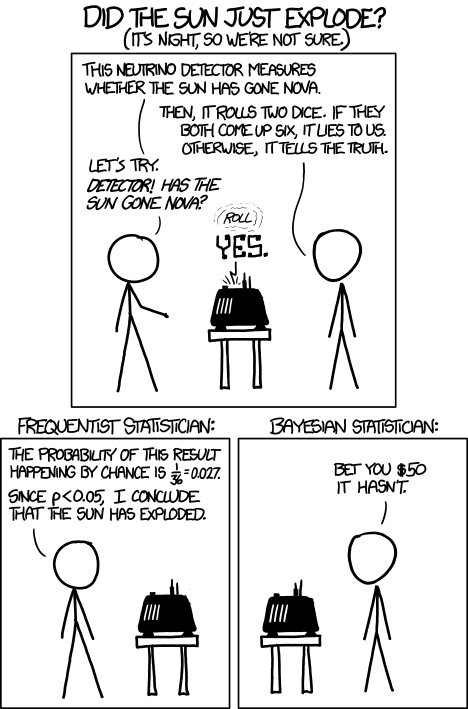

In a nutshell, frequentists use narrowly defined sets of formulas, tables, and solution steps. They are the grammar nazis of the probability world. On the other hand, Bayesian probability adds a few unknowns to the mix. The technical differences between the two approaches are not very intuitive, but this XKCD comic does a great job of summing them up:

3. Important Concepts in Bayesian Statistics

- Approximate Bayesian Computation (ABC): This set of techniques starts with a set of known summary statistics. A second set of the same statistics is calculated from a variety of potential models, and the candidates are placed in an acceptance/rejection loop. ABC favors those candidates that more closely match the known summary statistics (Medhi, 2014).

- Admissible decision rule: A decision rule is a guideline to help you support or reject a null hypothesis. Generally speaking, a decision rule is “admissible” if no other decision rule dominates it; that is, no other rule performs at least as well in every situation and strictly better in at least one. An admissible rule is not necessarily the best choice in every case, but it cannot be improved on across the board.

- Bayesian efficiency: An efficient design requires you to input parameter values; in a Bayesian efficient model you have to take your “best guess” at what those parameters might be. Figuring out what is an efficient design (and what isn’t) by hand is only possible for very small designs, as it’s a computationally complex process (Hess & Daly, 2010).

- Bayes’ theorem: see Bayes Theorem Problems

- Bayes factor: the Bayes factor is a measure of relative likelihood between two hypotheses, or what Cornfield (1976) calls the “relative betting odds.” The factor ranges between zero and infinity. When it is written as the likelihood of the data under the null hypothesis divided by the likelihood under the alternate hypothesis, values close to zero are evidence against the null hypothesis and evidence for the alternate hypothesis (Spiegelhalter et al., 2004).

- Bayesian network: A directed acyclic graph that represents a set of variables and their associated dependencies.

- Bayesian linear regression: treats regression coefficients and errors as random variables, instead of fixed unknowns. This tends to make the model more intuitive and flexible. However, the results are similar to ordinary least squares regression if priors are uninformative and N is much greater than P (i.e. when the number of observations is much greater than the number of predictors).

- Bayesian estimator: Also called a Bayes action, the Bayes estimator is defined as a minimizer of Bayes risk (the posterior expected loss). In more general terms, it’s a single number that summarizes the information in the posterior distribution about a particular parameter; under squared-error loss, for example, it is the posterior mean.

- Bayesian Information Criterion (also called the Schwarz criterion): given a set of models to choose from, you should choose the model with the lowest BIC.

- Bernstein–von Mises theorem: This is the Bayesian equivalent of the asymptotic normality results in the asymptotic theory of maximum likelihood estimation (Ghosh & Ramamoorthi, 2006, p.33).

- Conjugate prior: A prior is conjugate to a likelihood if the resulting posterior belongs to the same family of distributions as the prior. For example, if you’re studying people’s weights, which are normally distributed with a known variance, a normal prior on the mean weight gives a normal posterior.

- Credible interval: a range of values where an unobserved parameter falls with a certain subjective probability. It is the Bayesian equivalent of a confidence interval in frequentist statistics.

- Cromwell’s rule: This simple rule states that you should not assign probabilities of 0 (an event will not happen) or 1 (an event will happen), except when you can demonstrate an event is logically true or false. For example, the statement 5 + 5 = 10 is logically true, so you can assign it a probability of 1.

- Empirical Bayes method: a technique where the prior distribution is estimated from actual data. This is unlike the usual Bayesian methods, where the prior distribution is fixed at the beginning of an experiment.

- Hyperparameter: a parameter from the prior distribution that’s set before the experiment begins.

- Likelihood function: A measurement of how well a given set of parameter values explains the observed data. See: What is the Likelihood function?

- Maximum a posteriori estimation: An estimate of an unknown parameter; it is equal to the mode of the posterior distribution.

- Maximum entropy principle: This principle states that if you are estimating a probability distribution, you should select the distribution which gives you the maximum uncertainty (entropy) while still being consistent with everything you already know.

- Posterior probability: Posterior probability is the probability an event will happen after all evidence or background information has been taken into account. See: What is Posterior Probability?

- Principle of indifference: states that if you have no reason to expect one event will happen over another, all events should be given the same probability.
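
The acceptance/rejection loop described under Approximate Bayesian Computation can be sketched in a few lines. This is a minimal rejection-sampling version under stated assumptions, not the only form ABC takes: the summary statistic is a sample mean, the model is a normal distribution with a standard deviation of 1, the prior on the unknown mean is uniform on [-5, 5], and the function name, tolerance, and observed value are all hypothetical.

```python
import random
import statistics


def abc_rejection(observed_mean, n_obs, n_candidates=5000,
                  tolerance=0.1, seed=0):
    """Keep candidate means whose simulated summary is close to the observed one."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_candidates):
        # 1. Draw a candidate parameter from the (assumed) uniform prior.
        mu = rng.uniform(-5.0, 5.0)
        # 2. Simulate a data set from the model using that candidate.
        simulated = [rng.gauss(mu, 1.0) for _ in range(n_obs)]
        # 3. Accept the candidate if its summary statistic matches closely.
        if abs(statistics.fmean(simulated) - observed_mean) < tolerance:
            accepted.append(mu)
    return accepted


# Hypothetical known summary statistic: a sample mean of 1.5 from 20 points.
accepted = abc_rejection(observed_mean=1.5, n_obs=20)
print(f"accepted {len(accepted)} candidates")
print(f"approximate posterior mean: {statistics.fmean(accepted):.2f}")
```

The accepted candidates act as an approximate sample from the posterior. A smaller tolerance gives a closer approximation but rejects more candidates.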
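
To make the Bayes factor entry more concrete, here is a small sketch for two simple hypotheses about a coin. Everything in it is an assumption made for illustration: the null hypothesis says the probability of heads is 0.5, the alternate says 0.7, and the data are 14 heads in 20 flips. The factor is written as the likelihood under the null divided by the likelihood under the alternate, so values below 1 favor the alternate.

```python
import math


def binomial_likelihood(p: float, successes: int, trials: int) -> float:
    """Probability of the observed count if the success probability is p."""
    return (math.comb(trials, successes)
            * p ** successes
            * (1.0 - p) ** (trials - successes))


# Hypothetical data and hypotheses, for illustration only.
heads, flips = 14, 20
p_null, p_alt = 0.5, 0.7

# Bayes factor with the null hypothesis in the numerator.
bf_null_vs_alt = (binomial_likelihood(p_null, heads, flips)
                  / binomial_likelihood(p_alt, heads, flips))

print(f"Bayes factor (null vs. alternate): {bf_null_vs_alt:.3f}")
if bf_null_vs_alt < 1:
    print("The data favor the alternate hypothesis.")
else:
    print("The data favor the null hypothesis.")
```

When the hypotheses are not single points (for example, "p is somewhere above 0.5"), each likelihood has to be averaged over a prior on the parameter, which this sketch does not attempt.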
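
The conjugate prior, maximum a posteriori estimation, and credible interval entries fit together in one short example. The sketch below uses the Beta-Binomial pair, a standard conjugate combination in which a Beta prior on a success probability yields a Beta posterior. The prior Beta(2, 2), the data (7 successes in 10 trials), and the helper names are hypothetical, and the credible interval is approximated by sampling rather than computed exactly.

```python
import random


def beta_binomial_update(a, b, successes, trials):
    """Beta(a, b) prior plus binomial data gives a Beta posterior."""
    return a + successes, b + (trials - successes)


def map_estimate(a, b):
    """Mode of Beta(a, b); this formula is valid only when a > 1 and b > 1."""
    if a <= 1 or b <= 1:
        raise ValueError("mode formula requires a > 1 and b > 1")
    return (a - 1) / (a + b - 2)


def credible_interval(a, b, level=0.95, draws=20_000, seed=0):
    """Approximate equal-tailed credible interval from posterior samples."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    tail = (1.0 - level) / 2.0
    lower = samples[int(tail * draws)]
    upper = samples[int((1.0 - tail) * draws) - 1]
    return lower, upper


# Hypothetical prior and data, for illustration only.
a_post, b_post = beta_binomial_update(2, 2, successes=7, trials=10)
map_est = map_estimate(a_post, b_post)
lo, hi = credible_interval(a_post, b_post)

print(f"posterior: Beta({a_post}, {b_post})")
print(f"MAP estimate: {map_est:.3f}")
print(f"approximate 95% credible interval: ({lo:.3f}, {hi:.3f})")
```

Because the prior is conjugate, the update is simple addition on the two hyperparameters; no numerical integration is needed to obtain the posterior.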

Related Articles

- Bayesian Hypothesis Testing

- Fisher Information

- Inverse Probability

- Likelihood Function

- Posterior Distribution Probability

- Principle of Indifference

References

Ghosh, J. & Ramamoorthi, R. (2006). Bayesian Nonparametrics. Springer Science and Business Media.

Hess, S. & Daly, A. (2010). Choice Modelling: The State-of-the-art and the State-of-practice – Proceedings from the Inaugural International Choice Modelling Conference. Emerald Group Publishing.

Medhi, K. (Ed.) (2014). Encyclopedia of Information Science and Technology, Third Edition. IGI Global.

MIT Course Notes. Chapter 10: Principle of Maximum Entropy. Retrieved February 19, 2018 from:

https://mtlsites.mit.edu/Courses/6.050/2003/notes/chapter10.pdf

Spiegelhalter, D. et al. (2004). Bayesian Approaches to Clinical Trials and Health-Care Evaluation. John Wiley and Sons.