What is Relative Absolute Error?

Relative Absolute Error (RAE) is a way to measure the performance of a predictive model. It’s primarily used in machine learning, data mining, and operations management. RAE is not to be confused with relative error, which is a general measure of precision or accuracy for instruments like clocks, rulers, or scales.

The Relative Absolute Error is expressed as a ratio that compares the model’s mean absolute error (the mean of its absolute residuals) to the mean absolute error of a trivial or naive model. A reasonable model (one that produces better results than the trivial model) will have a ratio of less than one.

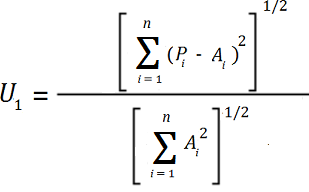
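
Written out, one common form of the ratio can be sketched as follows. It assumes the naive model always predicts the mean of the actual values. The symbols used here ($p_i$ for the predicted value, $a_i$ for the actual value, $\bar{a}$ for the mean of the actual values, and $n$ for the number of observations) are notation chosen for illustration rather than taken from the sources cited in this article.

$$
\mathrm{RAE} = \frac{\sum_{i=1}^{n} \lvert p_i - a_i \rvert}{\sum_{i=1}^{n} \lvert a_i - \bar{a} \rvert}
$$

Because both sums run over the same $n$ observations, dividing the numerator and the denominator by $n$ gives the equivalent ratio of two mean absolute errors.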

An early RAE is Theil’s U, a ratio that measures either forecast accuracy (Theil, 1958, pp. 31-42) or forecast quality (Theil, 1966, chapter 2).

Operations Management Example

In operations management, RAE is a metric comparing actual forecast error to the forecast error of a simplistic (naive) model. As a formula, it’s defined (Hill, 2012) as:

RAE = (mean of the absolute values of the actual forecast errors) / (mean of the absolute values of the naive model’s forecast errors).

A good forecasting model will produce a ratio close to zero; a poor model (one that’s worse than the naive model) will produce a ratio greater than one.
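
The ratio above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `relative_absolute_error`, the demand figures, and the model forecasts are all made up for the example, and the naive model is assumed to be "next period equals last period's actual value", which is only one of several possible naive baselines.

```python
def relative_absolute_error(actual, forecast, naive_forecast):
    """Mean absolute forecast error divided by the naive model's mean absolute error."""
    if not actual or not (len(actual) == len(forecast) == len(naive_forecast)):
        raise ValueError("inputs must be non-empty and of equal length")

    n = len(actual)
    # Mean of the absolute values of the actual forecast errors.
    model_mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / n
    # Mean of the absolute values of the naive model's forecast errors.
    naive_mae = sum(abs(a - nf) for a, nf in zip(actual, naive_forecast)) / n

    if naive_mae == 0:
        raise ValueError("naive model has zero error; the ratio is undefined")
    return model_mae / naive_mae


# Hypothetical demand history (illustrative numbers only).
demand = [100, 110, 105, 120, 115]

# Assumed naive model: each period's forecast is the previous period's actual.
actual = demand[1:]            # periods 2..5
naive_forecast = demand[:-1]   # periods 1..4, shifted forward by one period

# Hypothetical forecasts from the model being evaluated, for periods 2..5.
forecast = [108, 107, 116, 117]

rae = relative_absolute_error(actual, forecast, naive_forecast)
print(round(rae, 3))  # 0.286
```

Here the model's mean absolute error is 2.5 and the naive model's is 8.75, so the ratio is well below one, meaning the model outperforms the naive baseline on this made-up data.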

References

Cichosz, P. (2014). Data Mining Algorithms: Explained Using R. John Wiley and Sons.

Hill, A. (2012). The Encyclopedia of Operations Management: A Field Manual and Glossary of Operations Management Terms and Concepts. FT Press.

Theil, H. (1958). Economic Forecasts and Policy. Amsterdam: North Holland.

Theil, H. (1966). Applied Economic Forecasting. Chicago: Rand McNally.